Alvin Lang

Jul 21, 2024 04:57

LangChain explores the limitations and future of planning for agents with LLMs, highlighting cognitive architectures and current fixes.

According to a recent LangChain blog post, planning for agents remains a critical challenge for developers working with large language models (LLMs). The article examines what planning and reasoning mean for agents, the fixes developers currently rely on, and where agent planning is likely headed.

What Exactly Is Meant by Planning and Reasoning?

For an agent, planning and reasoning refer to the LLM’s ability to decide on a sequence of actions from the information available to it. This covers both short-term and long-term steps: the LLM weighs all available data, decides which step to take immediately, and then decides on the actions that should follow.

Most developers use function calling to let LLMs choose actions. Function calling, introduced by OpenAI in June 2023, lets developers supply JSON schemas describing the available functions so that the LLM can produce output that matches one of those schemas. Function calling helps with choosing the immediate action, but long-term planning remains a significant challenge: the LLM has to reason over a longer time horizon while still managing short-term actions.
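To make the mechanism concrete, here is a minimal sketch of the application side of function calling. The schema follows the general shape OpenAI’s chat API accepts in its `tools` parameter; the `get_weather` tool, the `REGISTRY`, and the `dispatch` helper are hypothetical names for illustration. No model is called: the model’s choice is simulated as a simplified dict whose `arguments` field is a JSON string, as real tool calls return it.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Hypothetical tool, used only for illustration.
def get_weather(city: str, unit: str = "celsius") -> str:
    return f"Weather for {city} in {unit}: (stub result)"

# JSON schema describing the tool. The model sees this schema, not the Python code.
GET_WEATHER_SCHEMA = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    },
}

# Maps each tool name to (schema, Python implementation).
REGISTRY: Dict[str, Tuple[dict, Callable[..., Any]]] = {
    "get_weather": (GET_WEATHER_SCHEMA, get_weather),
}

def dispatch(tool_call: dict) -> Any:
    """Run one simplified tool call of the form {"name": ..., "arguments": "<JSON string>"}."""
    name = tool_call["name"]
    if name not in REGISTRY:
        raise ValueError(f"model chose unknown tool: {name}")
    schema, fn = REGISTRY[name]
    args = json.loads(tool_call["arguments"])  # arguments arrive as a JSON-encoded string
    params = schema["function"]["parameters"]
    # Minimal key checks only; this is not full JSON Schema validation (e.g. enums are not checked).
    missing = [k for k in params["required"] if k not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    unknown = [k for k in args if k not in params["properties"]]
    if unknown:
        raise ValueError(f"unexpected arguments: {unknown}")
    return fn(**args)

# Simulated model output choosing the immediate action.
simulated_call = {"name": "get_weather", "arguments": '{"city": "Paris"}'}
print(dispatch(simulated_call))  # prints: Weather for Paris in celsius: (stub result)
```

Note that the schema only constrains the next action. Nothing in it tells the model what to do several steps later, which is the long-horizon gap described above.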

Current Fixes to Improve Planning by Agents

One of the simplest fixes is making sure the LLM has all the information it needs to reason and plan appropriately. Often, the prompt passed to the LLM simply lacks the information required to make a reasonable decision. Adding a retrieval step or clarifying the prompt instructions can significantly improve outcomes.
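A retrieval step can be as simple as selecting relevant snippets and placing them in the prompt before asking for the next action. The sketch below assumes a toy word-overlap ranking in place of a real retriever (such as an embedding-based vector search), and the function names and prompt wording are hypothetical.

```python
import re
from typing import List, Set

def tokenize(text: str) -> Set[str]:
    """Lowercase word set; a crude stand-in for real text processing."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents ranked by word overlap with the query, dropping zero-overlap ones."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d)]

def build_prompt(task: str, documents: List[str]) -> str:
    """Assemble a prompt that includes retrieved context and explicit instructions."""
    context = retrieve(task, documents)
    context_block = "\n".join(f"- {c}" for c in context) or "- (no relevant context found)"
    return (
        "You are an agent that must plan its next action.\n"
        "Use only the context below; if it is insufficient, ask a clarifying question.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Task: {task}\n"
        "First, state the single action you will take now."
    )

docs = [
    "A refund is allowed within 30 days of purchase.",
    "Our office is closed on public holidays.",
    "Refund requests must include the order number.",
]
print(build_prompt("Can the customer get a refund for an order from last week?", docs))
```

The two refund-related snippets end up in the prompt while the unrelated one is left out, so the model plans from the facts it actually needs.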

Another recommendation is changing the cognitive architecture of the application. Cognitive architectures can be categorized into general-purpose and domain-specific architectures. General-purpose architectures, like the “plan and solve” and Reflexion architectures, provide a generic approach to better reasoning. However, they can be too general to be useful in practice, which is why the post favors domain-specific cognitive architectures.

General Purpose vs. Domain Specific Cognitive Architectures

General-purpose cognitive architectures aim to improve reasoning generically and can be applied to any task. For example, the “plan and solve” architecture involves planning first and then executing each step. The Reflexion architecture includes a reflection step after task completion to evaluate correctness.
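Both general-purpose patterns can be sketched as small control loops around a model. The code below is an illustration, not the reference implementation of either architecture: `LLM` is assumed to be any function from prompt to completion, the `PLAN:`/`EXECUTE:`/`ATTEMPT:`/`REFLECT:` prompt prefixes are hypothetical, and `fake_llm` is a deterministic stub so the loops can run without a model.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # assumption: any function mapping a prompt to a completion

def plan_and_solve(task: str, llm: LLM) -> List[str]:
    """Plan first (one step per line), then execute each step in order."""
    plan = llm(f"PLAN: {task}").splitlines()
    return [llm(f"EXECUTE: {step}") for step in plan]

def reflexion_style(task: str, llm: LLM, max_attempts: int = 3) -> str:
    """Attempt the task, reflect on correctness, and retry with the reflection as feedback."""
    feedback = ""
    answer = ""
    for _ in range(max_attempts):
        answer = llm(f"ATTEMPT: {task}\nFEEDBACK: {feedback}")
        verdict = llm(f"REFLECT: {task}\nANSWER: {answer}")
        if verdict.startswith("CORRECT"):
            break
        feedback = verdict  # the reflection is fed into the next attempt
    return answer

def fake_llm(prompt: str) -> str:
    """Deterministic stub standing in for a real model."""
    if prompt.startswith("PLAN:"):
        return "look up the data\nsummarize the data"
    if prompt.startswith("EXECUTE:"):
        return "done: " + prompt[len("EXECUTE: "):]
    if prompt.startswith("ATTEMPT:"):
        # Answers wrongly until it has received some feedback.
        return "41" if prompt.endswith("FEEDBACK: ") else "42"
    if prompt.startswith("REFLECT:"):
        return "CORRECT" if prompt.endswith("ANSWER: 42") else "INCORRECT: off by one"
    return ""

print(plan_and_solve("summarize sales", fake_llm))
print(reflexion_style("compute the answer", fake_llm))
```

Neither loop knows anything about the task domain, which is what makes these architectures general-purpose and also why they may be too generic for a specific application.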

Domain-specific cognitive architectures, on the other hand, are tailored to specific tasks. They often include domain-specific classification, routing, and verification steps. The AlphaCodium paper demonstrates this with what it calls a “flow engineering” approach: the flow specifies steps such as coming up with tests, then coming up with a solution, then iterating on more tests. This flow is highly specific to the problem at hand (code generation) and may not transfer to other tasks.
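The following toy flow loosely follows the steps named above: tests first, then a solution, then more tests, repairing the solution whenever a test fails. It is not the AlphaCodium implementation. The `generate_*` functions are hypothetical stubs standing in for LLM calls, and the example problem (absolute value) is made up.

```python
from typing import Callable, List, Tuple

Test = Tuple[int, int]  # (input, expected output)
Solution = Callable[[int], int]

def generate_tests(problem: str) -> List[Test]:
    # Hypothetical stand-in for an LLM call that proposes initial tests.
    return [(3, 3), (0, 0)]

def generate_more_tests(problem: str, existing: List[Test]) -> List[Test]:
    # Hypothetical stand-in for an LLM call that proposes uncovered edge cases.
    return [(-4, 4)]

def generate_solution(problem: str, failures: List[str]) -> Solution:
    # Hypothetical stand-in for an LLM writing code; it "fixes" the bug once it sees failures.
    if failures:
        return lambda x: -x if x < 0 else x
    return lambda x: x

def run_tests(solution: Solution, tests: List[Test]) -> List[str]:
    """Return a human-readable message for every failing test."""
    failures = []
    for inp, expected in tests:
        got = solution(inp)
        if got != expected:
            failures.append(f"input {inp}: expected {expected}, got {got}")
    return failures

def flow(problem: str, max_iterations: int = 5) -> Tuple[Solution, List[Test]]:
    tests = generate_tests(problem)              # step 1: come up with tests
    solution = generate_solution(problem, [])    # step 2: come up with a solution
    expanded = False
    for _ in range(max_iterations):
        failures = run_tests(solution, tests)
        if failures:
            solution = generate_solution(problem, failures)  # repair using test feedback
        elif not expanded:
            tests += generate_more_tests(problem, tests)     # step 3: iterate on more tests
            expanded = True
        else:
            return solution, tests                           # all tests, old and new, pass
    raise RuntimeError("flow did not converge")

solution, tests = flow("absolute value")
print(solution(-7), len(tests))  # prints: 7 3
```

The order of steps lives in `flow`, not in the model: the engineer decided that tests come before code and that failures trigger a repair.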

Why Are Domain Specific Cognitive Architectures So Helpful?

Domain-specific cognitive architectures help by providing explicit instructions, either through prompt instructions or through hardcoded transitions in code. In effect, this moves part of the planning responsibility from the LLM to the engineers who design the flow. In the AlphaCodium example, for instance, the steps are predefined and guide the LLM through the process.
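Hardcoded transitions can be sketched as a small state machine in which the LLM (stubbed here) only does the work inside each step, while code decides which step runs next. This is plain Python written in the spirit of graph-based orchestration. It does not use the LangGraph API, and the support-ticket domain, step names, and checks are hypothetical.

```python
from typing import Callable, Dict, Optional

State = dict  # shared state passed from step to step

def classify(state: State) -> State:
    # Hypothetical stand-in for an LLM classification call.
    text = state["request"].lower()
    state["category"] = "billing" if "invoice" in text or "refund" in text else "technical"
    return state

def handle_billing(state: State) -> State:
    state["draft"] = "Billing team: your refund request has been logged."
    return state

def handle_technical(state: State) -> State:
    state["draft"] = "Support: please share the error message you see."
    return state

def verify(state: State) -> State:
    # Domain-specific verification written by engineers, not decided by the LLM.
    state["approved"] = bool(state["draft"]) and "password" not in state["draft"].lower()
    return state

STEPS: Dict[str, Callable[[State], State]] = {
    "classify": classify,
    "billing": handle_billing,
    "technical": handle_technical,
    "verify": verify,
}

def next_step(current: str, state: State) -> Optional[str]:
    """Hardcoded transitions: routing depends only on code and state."""
    if current == "classify":
        return "billing" if state["category"] == "billing" else "technical"
    if current in ("billing", "technical"):
        return "verify"
    return None  # "verify" is the terminal step

def run(request: str) -> State:
    state: State = {"request": request, "trace": []}
    step: Optional[str] = "classify"
    while step is not None:
        state["trace"].append(step)
        state = STEPS[step](state)
        step = next_step(step, state)
    return state

print(run("I need a refund for invoice 1234")["trace"])
# prints: ['classify', 'billing', 'verify']
```

Because routing lives in `next_step`, the model never has to plan the overall sequence; it only has to do each step well.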

According to LangChain, nearly all advanced agents in production use highly domain-specific, custom cognitive architectures. LangChain aims to make building such architectures easier with LangGraph, which is designed for the high controllability that reliable custom cognitive architectures require.

The Future of Planning and Reasoning

The LLM space has been evolving rapidly, and this trend is expected to continue. General-purpose reasoning is likely to become more integrated into the model layer, making models more intelligent and capable of handling larger contexts. However, there will always be a need to communicate specific instructions to the agent, whether through prompting or custom cognitive architectures.

LangChain remains optimistic about the future of LangGraph, believing that as LLMs improve, the need for custom architectures will persist, especially for task-specific agents. The company is committed to enhancing the controllability and reliability of these architectures.

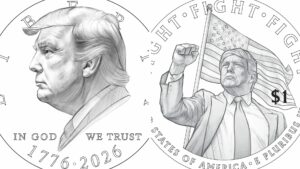

Image source: Shutterstock